Certain computational approaches (coincidentally those that I find most interesting) have a hard time reaching a more mainstream linguistic audience. Not because the world is a mean place where nobody likes math, but because 1) most results in this tradition are too far removed from concrete empirical phenomena to immediately pique the interest of the average linguist, and 2) there are very few intros for those linguists that are interested in formal work. This is clearly something we computational guys have to fix asap, and I left you with the promise that I would do my part by introducing you to some of the recent computational work that I find particularly interesting on a linguistic level.[1]

I've got several topics lined up --- the role of derivations, the relation between syntax and phonology, island constraints, the advantages of a formal parsing model --- but all of those assume some basic familiarity with Minimalist grammars. So here's a very brief intro to MGs, which I'll link to in my future posts as a helpful reference for you guys. And just to make things a little bit more interesting, I've also thrown in some technical observations about the power of Move...

Minimalist Grammars

I have talked a little bit about MGs in some earlier posts already, but I never gave you a full description of how they work. For the most part, MGs are a simplified version of old-school Minimalist syntax before the introduction of Agree or phases. You have your old buddies Merge and Move, and lexical items carry features that trigger operations. There are several technical changes, though:

- features have positive or negative polarity (rather than the interpretability distinction),

- feature checking takes place between features of opposite polarity,

- the features on every lexical item are linearly ordered and must be checked in this order,

- the Shortest Move Constraint (SMC) blocks configurations where two lexical items could both check the same movement feature on a c-commanding head, for example cases where both the subject and the object may undergo wh-movement to the C head.

Merge

Here's a very small MG lexicon that we can use to build a simple tree with Merge. Of course we can have bigger lexicons than that, as long as the number of entries is finite.

- Mary :: D-

- likes :: D+ D+ V-

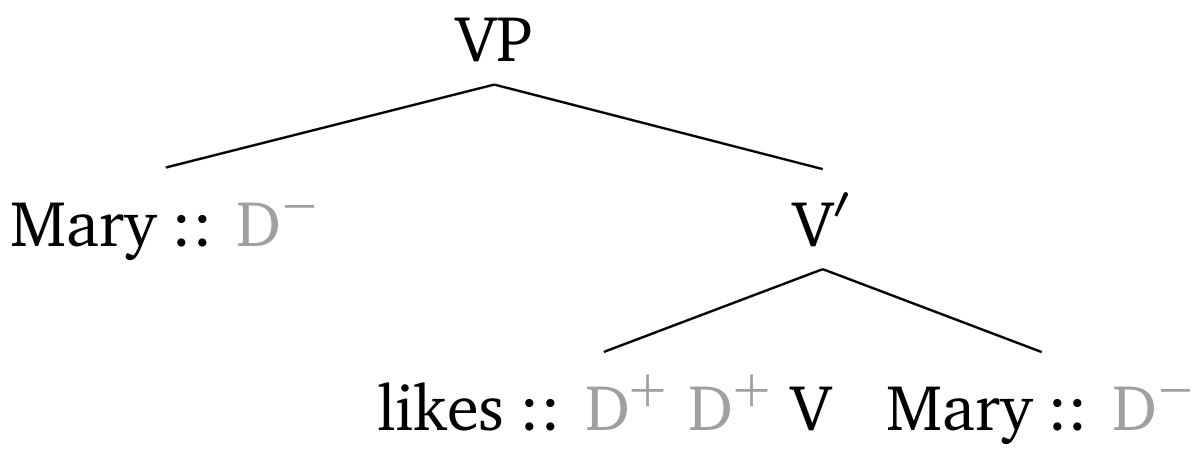

First we merge likes and Mary, which gives us the tree below. I'm using X'-style labels for interior nodes, but pretty much any labeling convention will do, including projecting no label at all. Also notice that checked features are grayed out.

Thanks to Merge, the D- feature on Mary has been checked, so that this instance of Mary does not need to undergo any syntactic operations anymore. But likes still has the features D+ and V-. Another Merge operation is needed to get rid of D+. So we merge another instance of Mary, resulting in the tree below.

The only remaining feature is V- on likes. If V is considered a final category by our grammar, then the tree we built is grammatical. If V is not final, then we have to continue adding new structure, but since there is no lexical item that could check V-, no further Merge steps are licit and the entire structure is ungrammatical.
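If you prefer to see the Merge steps spelled out, here is a minimal Python sketch. It is a toy encoding of my own, not a standard MG implementation: features are (name, polarity) pairs checked strictly in order, the first Merge of a lexical head puts the argument on the right (complement) and later Merges put it on the left (specifier), and the helper names `lex`, `merge` and `grammatical` are made up for illustration. Since this lexicon has no Move, the sketch simply rejects arguments that still carry unchecked features.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Expr:
    words: list[str]                  # string built so far
    features: list[tuple[str, str]]   # unchecked features of the head, in order
    lexical: bool                     # True for a bare lexical item


def lex(word: str, feats: str) -> Expr:
    """Parse an entry such as 'likes :: D+ D+ V-' into (name, polarity) pairs."""
    return Expr([word], [(f[:-1], f[-1]) for f in feats.split()], True)


def merge(sel: Expr, arg: Expr) -> Expr:
    """Check sel's first feature X+ against arg's first feature X-."""
    (n1, p1), (n2, p2) = sel.features[0], arg.features[0]
    if (n1, p1, p2) != (n2, "+", "-"):
        raise ValueError("first features do not match")
    if len(arg.features) > 1:
        raise ValueError("argument has features left; that needs Move")
    # first Merge of a lexical head: complement to the right;
    # later Merges: specifier to the left
    words = sel.words + arg.words if sel.lexical else arg.words + sel.words
    return Expr(words, sel.features[1:], False)


def grammatical(e: Expr, final: set[str]) -> bool:
    """Only the head's category feature may be left, and it must be final."""
    return len(e.features) == 1 and e.features[0][1] == "-" and e.features[0][0] in final


likes = lex("likes", "D+ D+ V-")
vp = merge(likes, lex("Mary", "D-"))   # likes Mary, head still has D+ V-
vp = merge(vp, lex("Mary", "D-"))      # Mary likes Mary, head has V-
print(" ".join(vp.words), vp.features)                 # Mary likes Mary [('V', '-')]
print(grammatical(vp, {"V"}), grammatical(vp, {"C"}))  # True False
```

The second call shows that the very same tree counts as ungrammatical if V is not among the final categories.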

Move

Now suppose that we want the object to undergo topicalization, yielding Mary, Mary likes. In Minimalist syntax, this is analyzed as movement of the object to Spec,CP. We can replicate this analysis by adding two more items to our lexicon:

- Mary :: D- top-

- e :: V+ top+ C-

We build Mary likes Mary as before, but with the new entry for the object, and then merge the resulting tree with the empty head e. This step is licensed by the matching V features: V- on likes and V+ on e.

The next feature that needs to be checked is top+, and lo and behold, the object still has a top- feature to get rid of. So the object moves to Spec,CP, both top features are checked, and we end up with the desired tree.

The only unchecked feature is the category feature C-, so once again the tree is grammatical iff C is a final category.

What would have happened if both the subject and the object had a top- feature? Which one would have moved then? Well, as I mentioned above, this issue cannot arise because the SMC blocks such configurations. The derivation would have been aborted immediately after Merger of the subject: at that point top- is the first unchecked feature of the subject, while the object is still waiting to check its own top- feature, so two items compete for the same top+.

However, the SMC would not block a derivation where the subject rather than the object carries the top- feature and thus undergoes (string vacuous) topicalization.

It is also perfectly fine to have top- features on both the subject and the object as long as they are not active at the same time. Let's look at an artificial example since I can't think of a good real world scenario (if you have any suggestions, this is your chance to be today's star of our prestigious comments section).

Suppose that there are actually two topic positions, with TP sandwiched between the two. Furthermore, the object has a top- feature, whereas the subject has the movement features nom- and top- so that it has to move to Spec,TP first before it can undergo topicalization. In this case the two topic features are never active at the same time and the derivation proceeds without interruption.
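Here's a slightly bigger sketch that adds Move and the SMC, again in Python and again a toy of my own rather than anyone's official implementation. It loosely follows the chain-based way MGs are often presented: a derived expression is a head chain plus a list of movers, i.e. phrases that still have licensee features such as top- and are therefore kept aside until they reach their final landing site. The SMC then boils down to the requirement that no two movers start with the same feature. The function names, the silent heads written as empty strings, the heads used for the two-topic scenario, and the subject John (only there to tell the two DPs apart in the output) are all hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class Chain:
    words: list[str]                  # pronounced material of this chain
    features: list[tuple[str, str]]   # unchecked features, e.g. ("top", "-")


@dataclass
class Expr:
    head: Chain                                        # projecting chain
    movers: list[Chain] = field(default_factory=list)  # phrases waiting to move
    lexical: bool = False                              # bare lexical item?


class SMCViolation(Exception):
    pass


def lex(word: str, feats: str) -> Expr:
    """Build an entry like lex('likes', 'D+ D+ V-'); '' marks a silent head."""
    words = [word] if word else []
    return Expr(Chain(words, [(f[:-1], f[-1]) for f in feats.split()]), [], True)


def smc(movers: list[Chain]) -> list[Chain]:
    """No two movers may have the same first (licensee) feature."""
    firsts = [m.features[0] for m in movers]
    if len(firsts) != len(set(firsts)):
        raise SMCViolation(f"competing movers: {firsts}")
    return movers


def merge(sel: Expr, arg: Expr) -> Expr:
    (n1, p1), (n2, p2) = sel.head.features[0], arg.head.features[0]
    if (n1, p1, p2) != (n2, "+", "-"):
        raise ValueError("no matching Merge features")
    movers, words = sel.movers + arg.movers, sel.head.words
    rest = arg.head.features[1:]
    if rest:                  # e.g. Mary :: D- top- keeps top- and waits
        movers = movers + [Chain(arg.head.words, rest)]
    elif sel.lexical:         # first Merge: complement on the right
        words = words + arg.head.words
    else:                     # later Merge: specifier on the left
        words = arg.head.words + words
    return Expr(Chain(words, sel.head.features[1:]), smc(movers))


def move(e: Expr) -> Expr:
    name, pol = e.head.features[0]
    hits = [m for m in e.movers if m.features[0] == (name, "-")]
    if pol != "+" or len(hits) != 1:
        raise ValueError("no unique mover for this feature")
    mover, words = hits[0], e.head.words
    others = [m for m in e.movers if m is not mover]
    if len(mover.features) > 1:   # intermediate landing site: keep moving
        others.append(Chain(mover.words, mover.features[1:]))
    else:                         # final landing site: pronounced as specifier
        words = mover.words + words
    return Expr(Chain(words, e.head.features[1:]), smc(others))


# Topicalization of the object: Mary, Mary likes
likes = lex("likes", "D+ D+ V-")
vp = merge(merge(likes, lex("Mary", "D- top-")), lex("Mary", "D-"))
cp = move(merge(lex("", "V+ top+ C-"), vp))
print(" ".join(cp.head.words), cp.head.features)   # Mary Mary likes [('C', '-')]

# Both DPs with top-: aborted right after the subject is merged
try:
    merge(merge(likes, lex("Mary", "D- top-")), lex("Mary", "D- top-"))
except SMCViolation as err:
    print("aborted:", err)

# Two topic positions with TP in between (hypothetical heads Top, T, C)
vp2 = merge(merge(likes, lex("Mary", "D- top-")), lex("John", "D- nom- top-"))
top = move(merge(lex("", "V+ top+ Top-"), vp2))
tp = move(merge(lex("", "Top+ nom+ T-"), top))
two = move(merge(lex("", "T+ top+ C-"), tp))
print(" ".join(two.head.words))   # John Mary likes
```

The three runs mirror the discussion: object topicalization yields Mary Mary likes, putting top- on both DPs is aborted as soon as the subject is merged, and the subject with nom- top- goes through without interruption because its top- only becomes active after the object's top- has been checked.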

Special Movement Types and Generative Capacity

Since Minimalist grammars put no particular constraints on movement except the SMC, they allow for a variety of movement configurations, including roll-up movement, remnant movement, and smuggling.

Imagine a tree where UP contains ZP, which contains YP, which in turn contains XP.

- In roll-up movement, XP moves to Spec,YP, followed by movement of YP to Spec,ZP (which might also undergo roll-up movement to Spec,UP).

- In remnant movement (popularized by Richard Kayne[2]), XP moves out of YP into Spec,ZP before YP undergoes movement itself to Spec,UP.

- In smuggling (a term coined by Chris Collins[3]), YP moves to Spec,ZP, which is followed by extraction of XP from YP to Spec,UP.

| | X | Y | Z | U |
|---|---|---|---|---|
| roll-up | f- | f+ g- | g+ h- | h+ |
| remnant | f- | g- | f+ | g+ |
| smuggling | f- | g- | g+ | f+ |

Let's make the link between these movement types and generative capacity a little bit more precise (Caution: the discussion may reach critical levels of jargon density; proceed with care). Minimalist grammars that only use Merge have the same generative capacity over strings as context-free grammars, while the set of phrase structure trees generated by such an MG is a regular tree language. We have also known for a long time that adding movement to MGs increases their weak generative capacity to that of multiple context-free grammars (see the discussion in this post for some background), which also entails that not all phrase structure tree languages are regular.

Greg Kobele showed[4] that if movement must respect the Proper Binding Condition (PBC), which requires that every non-initial element of a movement chain is c-commanded by the head of the chain, the increase in weak generative capacity does not occur: MGs still generate only context-free string languages. Building on some recent results about the equivalence of Merge and monadic second-order logic (which was the topic of this lovely series of blog posts), one can also show that strong generative capacity is not increased by PBC-obeying movement. Now if you take a look at the three movement types above, you will see that remnant movement is the only one that does not obey the PBC: once YP moves to Spec,UP, the trace that XP left inside YP is no longer c-commanded by XP in Spec,ZP. Hence remnant movement is the only (cyclic, overt, phrasal, upward) movement type that increases the power of MGs beyond what is already furnished by Merge.
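The PBC reasoning can be checked mechanically on the feature table, at least for this toy configuration. The Python sketch below is a hypothetical illustration, not Greg's proof: it assumes a single spine in which UP contains ZP, ZP contains YP and YP contains XP, that every licensee f- is checked by the unique head carrying f+, and that movement steps happen bottom-up in the order of their landing sites. Under these assumptions a PBC violation arises exactly when a phrase moves after some phrase it contains has already moved out of it.

```python
from __future__ import annotations

# Spine from lowest to highest: XP inside YP inside ZP inside UP.
SPINE = ["X", "Y", "Z", "U"]

# The feature table from the post, one row per movement type.
TABLE = {
    "roll-up":   {"X": "f-", "Y": "f+ g-", "Z": "g+ h-", "U": "h+"},
    "remnant":   {"X": "f-", "Y": "g-", "Z": "f+", "U": "g+"},
    "smuggling": {"X": "f-", "Y": "g-", "Z": "g+", "U": "f+"},
}


def movement_steps(row: dict[str, str]) -> list[tuple[str, str]]:
    """(mover, target head) pairs, ordered bottom-up by landing site."""
    steps = []
    for mover, feats in row.items():
        for feat in feats.split():
            if feat.endswith("-"):
                licensor = feat[:-1] + "+"
                target = next(h for h, fs in row.items() if licensor in fs.split())
                steps.append((mover, target))
    return sorted(steps, key=lambda step: SPINE.index(step[1]))


def violates_pbc(row: dict[str, str]) -> bool:
    """True if a phrase moves after a phrase it contains has moved out of it."""
    rank = SPINE.index
    steps = movement_steps(row)
    for i, (inner, landing) in enumerate(steps):
        for outer, _ in steps[i + 1:]:
            # inner started inside outer but landed outside it; once outer
            # moves, inner's trace goes along and is no longer c-commanded
            if rank(inner) < rank(outer) < rank(landing):
                return True
    return False


for name, row in TABLE.items():
    print(f"{name:10} {movement_steps(row)} PBC violated: {violates_pbc(row)}")
```

Under these assumptions only the remnant row is flagged, which matches the observation above. The check itself says nothing about generative capacity; it only locates the configuration that the capacity results hinge on.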

Summary

A Minimalist grammar is given by a finite list of lexical items, each of which consists of a phonetic exponent and a string of features. Each feature is either a Merge feature or a Move feature, and it has either positive or negative polarity. The grammar generates every phrase structure tree that can be obtained by Merge and Move such that

- all features have been checked off except the category feature of the highest head,

- said category feature is a final category,

- the SMC has not been violated at any step of the derivation.

Greg's thesis is also a good starting point if you'd like to know more about MGs, as are the first two chapters of my thesis and Ed Stabler's survey paper in the Oxford Handbook of Linguistic Minimalism.

[1] Kudos to Norbert for keeping his blog open to these formal topics and discussions.

[2] Kayne, Richard (1994): The Antisymmetry of Syntax. MIT Press.

[3] Collins, Chris (2005): A Smuggling Approach to the Passive in English. Syntax 8, 81--120.

[4] Kobele, Gregory M. (2010): Without Remnant Movement, MGs are Context-Free. MOL 10/11, 160--173.

‘peak’ → ‘pique’

Fixed. The internet is a better place now.

"We have also known for a long time that adding movement to MGs increases their weak generative capacity to that of multiple context-free grammars (see the discussion in this post for some background), which also entails that not all phrase structure tree languages are regular."

Could you amplify this point? Why aren't they regular? Are you distinguishing the derivation trees from the phrase structure trees?

Ah, an advanced issue :)

Yes, derivation trees and phrase structure trees are different objects. The latter are the output structure of Merge and Move, whereas the former are the record of how this output structure was generated. All the example trees above are phrase structure trees. Derivations will be the topic of my next post.

There is a close connection between regular tree languages and CFGs. Intuitively, you can think of a regular tree language as the set of trees of a CFG where the interior (= non-terminal) nodes have been relabeled. Since the interior node labels are irrelevant for the string yield, every regular tree language has a context-free string yield. So a language whose string yield is not context-free cannot be regular.
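The relabeling intuition is easy to make concrete. In this Python sketch (the tree encoding, the X'-style labels and the state names are all hypothetical choices for illustration) a tree is either a word or a pair of a label and a list of daughters. Renaming the interior nodes, for instance to states of a tree automaton, changes the tree but cannot change its string yield, because the yield only ever looks at the leaves.

```python
from __future__ import annotations


def yield_of(tree) -> list[str]:
    """Left-to-right sequence of leaves; a leaf is a plain string."""
    if isinstance(tree, str):
        return [tree]
    _, daughters = tree
    return [word for d in daughters for word in yield_of(d)]


def relabel(tree, rename):
    """Rename interior nodes only; the leaves (the words) are untouched."""
    if isinstance(tree, str):
        return tree
    label, daughters = tree
    return (rename(label), [relabel(d, rename) for d in daughters])


# CFG-style tree for "Mary likes Mary" with X'-style labels
cfg_tree = ("VP", [("DP", ["Mary"]), ("V'", [("V", ["likes"]), ("DP", ["Mary"])])])

# Hypothetical relabeling, e.g. replacing categories by automaton states
states = {"VP": "q1", "V'": "q2", "V": "q3", "DP": "q4"}
relabeled = relabel(cfg_tree, states.__getitem__)

print(relabeled)
print(yield_of(relabeled) == yield_of(cfg_tree))   # True
```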

To make the upcoming post even more enticing: derivation trees satisfy the no tampering condition and the extension condition, they do not explicitly represent the surface order, and, if you make *move* a binary function symbol whose second argument is the maximal projection of the mover (which turns the derivation into a DAG), they implement the copy theory of movement as a 'virtual conceptual necessity'. In short, derivation trees are formal objects which capture exactly the properties that Chomsky wants in a structure.

Very informative post, thank you, Thomas. Just one question about Greg's comment:

"In short, derivation trees are formal objects which capture exactly the properties that Chomsky wants in a structure."

Do I understand correctly that the structures Chomsky is interested in [according to you [pl.]] are abstract structures, not biological structures?

@Christina: I feel like I have answered this question. Clearly, I have not answered it in a way that is satisfying for you. Would you please let me know exactly which part of the thing below which seems to me to be an answer is unclear/unsatisfying to you?

The structures that (according to me) Chomsky is interested in are high level descriptions of regularities *in the world* (either behaviour, or the brain, or what have you). In much the same way that a chemist might be interested in the group structure of molecules under various transformations. The abstract algebraic structure of the group describes the fact that molecules behave the same way regardless of orientation. The chemist is not committed to there being some algebraic structure which is the thing of interest. The things of interest possess the algebraic structure (or are organized in such a manner that one can view them in terms of this algebraic structure).

Thank you Greg. Part of my confusion arises from all these analogies to chemists [or comet trajectories or bee navigation or what have you]. If I ask a chemist about the structures she is interested in she gives me an answer involving properties of chemical elements or compounds; she does not tell me anything about the growth patterns of lichens or the love life of snails.

So can we please just stick to linguistic objects? I said Thomas' post was informative because it deals with what I consider relevant descriptions of linguistic structures. As did the comments. So I just wanted to make sure I understand correctly that Chomsky's interest really IS in abstract linguistic objects like sentences such as "John likes Mary" or "Mary likes Mary", in derivation trees and phrase structure trees, etc. etc. [I ask because when reading some of his recent publications I got a rather different impression].

So we are not allowed to use analogies to non-linguistic sciences to illustrate the methodological problems? And yet you use an analogy from chemistry to illustrate your objection itself? Oh well.

When you say "abstract" in "abstract linguistic objects" do you just refer to the type/token distinction? And do you think of a token of a phrase structure tree as being abstract?

"So we are not allowed to use analogies to non-linguistic sciences to illustrate the methodological problems? And yet you use an analogy from chemistry to illustrate your objection itself? Oh well."

Seriously now, where do I say you're not allowed to use analogies? I said I find it CONFUSING when instead of a linguistic example one from chemistry is used to illustrate a linguistic point. If you are unable to appreciate my frustration you may want to read Chomsky's Science of LANGUAGE which contains a grand total of ONE linguistic tree 'diagram' [provided by McGilvray] but analogies to umpteen non-human species, astronomy, chemistry, physics... [well, you can read a summary here: http://ling.auf.net/lingbuzz/001592]

So if you already have an 'oh-well' experience when I use ONE analogy from chemistry you may appreciate how some of us [who want to learn about linguistics!] feel about the constant stream of analogies...

To remind you: I had asked a pretty straightforward question:

"Do I understand correctly that the structures Chomsky is interested in [according to you [pl.]] are abstract structures, not biological structures?"

I had expected a one sentence answer like:

[1] Yes Chomsky is interested in abstract structures. or

[2] No Chomsky is interested in biological structures.

So maybe you can explain to me how the analogy to chemistry is helpful here?

As for your question: I use 'abstract' to refer to abstract objects. So a token of a phrase structure tree is a concrete object [likely such tokens occur on some piece of paper, a computer screen etc.].

I think you are presenting a false dichotomy.

[3] Chomsky is interested in abstract biological structures.

If all types, and properties of concrete objects are abstract, then all scientists are interested in abstract structures.

I would appreciate it if you'd be a bit less hostile. I did not claim to give a complete list of possible answers. I just gave 2 examples [indicated by my use of 'like' and 'or'] - so there was no dichotomy, much less a false dichotomy. I also said nowhere that all properties of concrete objects are abstract. First, tokens are not the only concrete objects. Second, when talking about tokens of linguistic objects: how could the colour of the ink used to write the sentence "Types are abstract objects" be an abstract object? Tokens can represent abstract objects but they cannot be abstract objects.

I do not know what abstract biological structures are. Maybe you can explain it to me. Let's take one of Chomsky's early examples:

[1] John is eager to please

[2] John is easy to please

What is the abstract biological structure of these sentences? Does it differ from the abstract [mechanical??] structure of a computer that 'generates' these sentences? If so how?

As a p.s. re my frustration about excessive talk about chemistry [and other unrelated subjects] let me quote Norbert [I hope you won't object to that too]

"lots of linguistics is based on language data that practitioners are native speakers of, checking the “facts” can be done quickly and easily and reliably (as Jon Sprouse and Diego Almeida have demonstrated in various papers). ... we have ready access to lots of useful, reliable and usable data."

http://facultyoflanguage.blogspot.ca/2014/01/linguistics-and-scientific-method.html

So let's use some of these wonderful easily accessible data when illustrating linguistic insights - thank you.

"I do not know what abstract biological structures are. Maybe you can explain it to me."

So here are some examples of abstract biological structures: genotypes, species, populations, phylogenetic trees. Theories of these abstract objects can help predict and explain the "behaviour" of particular individual plants and animals.

With regard to

[1] John is eager to please

[2] John is easy to please

[3] The chicken is ready to eat

The claim that Chomsky would make is that we have some latent structures associated with these strings of words, one each for 1 and 2 and two for 3,

and that when an individual English speaker (me) hears a token of the sentence 3, then my brain will, depending on context, construct a psychologically real, neurally instantiated structure that is at a suitable level of abstraction equivalent to the sorts of structures that linguists write down in their text books.

So that is I think the strongest claim that the theories make. And some people might back off to something less committing.

That seems coherent to me even if you think, as I do, that our current theories of what these structures are are almost certainly false (the pessimistic meta-induction being very strong indeed in the case of linguistic theories).

An addendum to Alex's comment. An equally 'literalist' view of the structures linguists assign could take the position that the process of interpreting these strings of words exhibits regularities which can be described in terms of the structures one typically associates with these sentences.

Thank you both for these clarifications. It emerges that we use the term 'abstract object' differently and hence tend to talk past each other. In light of the fact that we just had the discussion about the many frustrating confusions re the use of "recursion" [depending on what is meant by the term] I think it is a good thing to clarify what we mean by 'abstract' before launching into further discussion.

So there are at least 2 distinct meanings for 'abstract object'

[1]. abstract vs. concrete [or physical if you like]

[2]. general [=abstract] vs. particular

From what you say I gather you use 'abstract' meaning [2] while I had [1] in mind. Note that it is possible that an abstract concept [2] can refer to either concrete or abstract [1] objects. Now the Platonist holds that abstract objects [1] exist [are real] independently of human minds just as concrete objects exist; they just have fundamentally different properties. Is it correct to say that you do not believe that "genotypes, species, phylogenetic trees" are real in that sense [e.g. exist outside our theories]? You may in fact deny that any abstract objects [1] exist?

Further you seem to say that the process of abstraction [3] is relevant when we want to understand the difference between essential vs. accidental properties "my brain will, depending on context, construct a psychologically real, neurally instantiated structure that is at a suitable level of abstraction equivalent to the sorts of structures that linguists write down in their text books." So there are some accidental properties of tokens of linguistic objects [being instantiated in brains or maybe computers or being written down on paper and then there is some essence that remains the same no matter how the tokens are instantiated - and that is what we're really interested in]?

Let's not worry if what you sketched is a plausible view but assume it to be true. You seem to say there is some kind of 1:1 translation between "a psychologically real, neurally instantiated structure" [= a concrete object] and "the sorts of structures that linguists write down" [do you mean the tokens they literally write down or the types of which they write down a token?].

Now this is what I wanted clarification on: if it is also possible to imagine a computer that has an internal structure [i am unfamiliar with the lingo but confident you can fill in the blanks here] that instantiates the same 'sorts of structures that linguists write down' [for "The chicken is ready to eat"] it would seem that on that level of abstraction [3] we have abstracted away all genuine biological properties. So again my question: which are the abstract biological [as opposed to computer internal] structures you talked about earlier? [If a biologist claims genotype G causes phenotype P, he will also specify that G consists of the genes G1, G2, G3, ... G444 and then you can look at individual organisms and confirm they have G1, G2, G3 ... G444. If they don't you revise G. I just do not see that linguists do anything even remotely similar when they work on the 'sorts of structures they usually write down']

@Greg: yes I think the super strong commitment I gave is probably stronger than most people would be happy making nowadays, though back in the early days people did think that. For example, to me it seems pretty unlikely given what we now know about incremental processing etc. that there will be any moment in time when the whole tree is complete and present in memory.

@Christina:

"Let's not worry if what you sketched is a plausible view but assume it to be true. You seem to say there is some kind of 1:1 translation between "a psychologically real, neurally instantiated structure" [= a concrete object] and "the sorts of structures that linguists write down" [do you mean the tokens they literally write down or the types of which they write down a token?]. "

The problem you seem to be worrying about doesn't seem to have anything that is specific to linguistics.

Say I claim that water is H_2 O.

The symbols I write down are a notation of the molecular structure of water.

Do you also find this metaphysically puzzling? Does the type/token distinction matter here?

Point 2:

"if it is also possible to imagine a computer that has an internal structure [i am unfamiliar with the lingo but confident you can fill in the blanks here] that instantiates the same 'sorts of structures that linguists write down' [for "The chicken is ready to eat"] it would seem that on that level of abstraction [3] we have abstracted away all genuine biological properties."

Again this seems to be an argument that one could apply to other biological processes. So let's try a reductio of this argument.

Say, the way that DNA codes for amino acids. This code can be exactly implemented in a computer, thus abstracting away all biological and chemical properties. But it is indubitably a biochemical fact. So the fact that we can find a level of representation that abstracts away from the biological facts does not imply that the thing in question is not an abstract biological structure.

Thank you for the further clarifications Alex. You say:

"Again this seems to be an argument that one could apply to other biological processes. So let's try a reductio of this argument.

Say, the way that DNA codes for amino acids. This code can be exactly implemented in a computer, thus abstracting away all biological and chemical properties. But it is indubitably a biochemical fact. So the fact that we can find a level of representation that abstracts away from the biological facts does not imply that the thing in question is not an abstract biological structure. "

Let me explain why I object to your analogy:

1. You are right that some code can be implemented in a computer. But [to borrow Chomsky's [in]famous quip against connectionists "Can your [connectionist] net grow a wing?"] as long as you just implement the code in a computer that computer can't produce [generate] any amino acids. Maybe it can run a virtual amino acid production line [kinda like SimCity] - for which the biological and chemical properties don't matter. But that is quite different from building a model that generates the same outputs [amino acids] as the original. I thought what Chomsky is interested in is the latter, not the former. And for that you cannot entirely eliminate the bio-chemical properties of DNA.

2. The main reason I am uncomfortable with the analogy you suggest is that we do not know whether it is a legitimate analogy. In the DNA/Amino acid case we first discovered the bio-chemical properties THEN we wrote a code that allows us to abstract away from them. In the language case we do not have the foggiest idea WHAT the bio-chemical properties are that correspond 1:1 to

[1] John is eager to please

[2] John is easy to please

[3] The chicken is ready to eat

so how can we 'abstract away from them'?

Since you love analogies so much, let's take one that should be uncontroversial: a chess computer. We have algorithms for such computers, some good enough to beat the best human players. We also know that the algorithm used by a computer is very different from what goes on in a human brain when a human plays chess. So if our Martian friend would just observe Kasparov playing against Deep Blue and then conclude both have the same abstract algorithm implemented he'd be incorrect. We know this independently, just as we know independently what bio-chemical properties have been abstracted away in the DNA algorithm case. But HOW do you know whether an abstract algorithm you develop for [1] - [3] above justifies the DNA analogy [as opposed to the chess analogy]?

To add to the above: The Platonist abstract [1] vs. concrete (physical) dichotomy, as I understand the dichotomy, has stopped being useful after Newton talked about "forces". Joseph Priestley shows this very nicely. After all, there is no obvious concrete counterpart to a "force", there appear to be only observable consequences. It is almost purely an abstract [1] concept. However, the concept has been very useful in understanding the world around us, and I don't see physicists running around too worried about where forces are in the "physical" world.

The sciences, unlike some philosophers, seem to be pretty uniform in entertaining directly unobservable concepts/ideas as relevant/useful notions.

Having said this, I sometimes think even abstract [2], the Marrian sense of the term perhaps, is too myopic or constraining. For example, "abstract" forces interact with "concrete" objects. Now, is that force "general" or "particular"? I don't see the utility of the dichotomy here.

The real dichotomy, as I see it, is simply more vague: (1) there are ideas/concepts that help us make sense of the world or give us insight (2) and there are those that aren't useful in doing so.

[you can read more about Priestley's view in this collection - http://www.springer.com/philosophy/epistemology+and+philosophy+of+science/book/978-94-017-8773-4]

@Christina:

1. I agree that you cannot shoot someone with a picture of a gun. But I don't think Chomsky is guilty of having this map/territory confusion.

2. This is a separate question: namely whether the specific theories of linguistic structure that Chomsky et al have put forward are correct or not, and whether the methodologies that they use justify the conclusions they draw. I think almost certainly not to both questions. This is independent of the question at hand.

(parenthetically: I think your history of the discovery of the genetic code is not quite right -- Gamow postulated a triplet code before the code was discovered. It wasn't entirely a bottom up analysis, but was guided by the assumption that it had to perform a coding function at a higher and more abstract level of analysis.)

Chomsky's remark about wings was very strange and I won't attempt to explain it (everybody screws up), but for example although I can't use a diagram of the motherboard of my computer to write papers or play games with, I can use it to perform useful tasks such as adding more memory or replacing the hard drive. So that, according to me, is what these abstract objects are, attempted guides to the real things that might someday be useful (and are now, surely, of extremely low quality).

If a connectionist model of syntax could take as its learning input text with complex unembedded NPs but only very simple embedded ones, and produce as output text with the occasional fairly complex embedded one, then I'd have to take it seriously.

@Alex: Thank you for the parenthesis. My cartoon was not meant to suggest 'a history of the discovery of the DNA code' - so I should have said we knew at least some of the important bio-chemical properties of DNA before it was possible to hypothesize about the code. And then further empirical work showed that details of Gamow's proposal were incorrect [I copy from wiki below since it may be informative for some of the BIOlinguists]:

------------------------------------------

After the discovery of the structure of DNA in 1953 by Francis Crick and James D. Watson, Gamow attempted to solve the problem of how the order of the four different kinds of bases (adenine, cytosine, thymine and guanine) in DNA chains could control the synthesis of proteins from amino acids.[13] Crick has said[14] that Gamow's suggestions helped him in his own thinking about the problem. As related by Crick,[15] Gamow suggested that the twenty combinations of four DNA bases taken three at a time correspond to twenty amino acids used to form proteins. This led Crick and Watson to enumerate the twenty amino acids which are common to most proteins.

However the specific system proposed by Gamow (known as "Gamow's diamonds") was incorrect, as the triplets were supposed to be overlapping (so that in the sequence GGAC (for example), GGA could produce one amino acid and GAC another) and non-degenerate (meaning that each amino acid would correspond to one combination of three bases – in any order). Later protein sequencing work proved that this could not be the case; the true genetic code is non-overlapping and degenerate, and changing the order of a combination of bases does change the amino acid.

--------------------------------------------

This is in a nutshell how progress in the biological sciences is made: you start from the bio-chemical properties you KNOW at the time and you propose [on a more abstract level if you like] a theory that might explain something you do not already know. Then you test this proposal and if needed amend it. Not this "Galilean method" BS according to which you just 'set aside' whatever [seems to] refute your proposal.

Now correct me if I am wrong but to my knowledge Chomsky's work has never been informed by biology [bio-chemistry of the brain, connections between neurons etc. etc.]. The sentences we talked about are taken from what Chomsky dismissively calls E-language. From this E-language he has inferred all his different "proposals". Compare the Standard Theory to Minimalism: both are allegedly about I-language. Now our biology has not undergone a dramatic change between 1965 and 1995. What then justifies all the changes in Chomsky's theoretical proposals? Most certainly not any discoveries about our biology or biochemistry [like in the case of Gamow's initial proposal and later revision] - so I just find it disingenuous to talk about abstract biological properties when biology never is even looked at...

Someone else will have to step in and defend Chomsky's methodology here because I am not prepared to since I think it is mistaken.

What we were arguing about was whether it is scientifically valid to investigate/posit phrase structures, and we all seem happy about this now.

All I get out of this whole back and forth is that Christina doesn't think the word "biological" is applied in the proper way. As Alex said, with respect to the sole substantial question - whether or not it is scientifically valid to posit phrase structures in explanations pertaining to the human capacity to produce and understand sentences - there seems to be agreement now: there is nothing incoherent about doing this.

If you think Chomskyans shouldn't use Bio-Linguistics to refer to what they are doing because it doesn't square with what you feel comfortable calling "bio-Anything", that's nothing that merits an academic discussion.

What is important is to distinguish a stance such as Chomsky's (and, I take it, to some extent, even Alex C's) which takes grammars to be descriptions of some we-know-not-what-exactly-in-humans from a stance such as Postal's where grammars are taken to be merely abstract objects.

If you don't like Bio-Linguistics, call it C-Linguistics, or "The Wrong Theory", or what have you. But don't "let yoursel[ves] be goaded into taking seriously problems about words".

Thank you for your comments, Benjamin. I suggest biologists are the ones to decide whether or not much of this loose talk about 'biological properties' or 'I-language' etc. by Chomsky [and some of his followers] is justified. Don't take my word for it, ask a biologist.

Now maybe you can tell me why you use the [derogative?] 'merely' when referring to Postal's view? Do you think that grammars are descriptions of abstract objects [as Alex says we all agree on that now] plus "of some we-know-not-what-exactly-in-humans" and hence the work that Postal does is not as sophisticated as the work you do? Maybe you can point to a few insights you have contributed that someone like Postal who merely works on the abstract structure of language could not possibly have contributed?

@Alex: You say "What we were arguing about was whether it is scientifically valid to investigate/posit phrase structures, and we all seem happy about this now."

This is not correct at all; what we were arguing about was whether "the structures Chomsky is interested in [according to you [pl.]] are abstract structures [or] biological structures". I had understood Greg to say they were abstract structures and you suggested: [3] Chomsky is interested in abstract biological structures. - I asked you to specify what is BIOLOGICAL and from the discussion I gather nothing. So if you do not maintain there is any meaningful biological content, there is indeed no disagreement.

Christina Behme, January 16, 2014 at 7:48AM: Part of the 'problem' might be the disrespect displayed in phrases like 'people like Everett'.

Christina Behme, January 25, 2014 at 2:35 AM: "Galilean method" BS

The hypocrisy boggles the mind.

@Christina: Why can we not be happy with the claim that Chomsky is interested in abstract properties of the mind/brain? (As I understand `abstract biological structures' to be intended to mean.)

Clearly, these abstract properties are not themselves biological, but they are properties of biological things.

His recent emphasis on the `beyond explanatory adequacy' explicandum (why we use language but gorillas do not), and the obvious evolutionary nature of the explicans, seems to more than justify the term `biolinguistics' -- we don't `just' want to account for properties of individuals or communities, but rather for the evolutionary development of these properties.

@Christina, just to finish this off so we don't need to have this discussion again. Putting to one side the correctness and insightfulness of Chomsky's theories, are you happy that it is scientifically valid to posit linguistic structures as abstract representations of regularities in brains etc., without thereby becoming a Platonist?

In reverse order: @Alex: as long as these theories actually are about regularities in brains, they are certainly not platonist - as Paul will be the first to tell you. How the structures [of representations = tokens] in the brain are related to the structures linguists [like say Collins&Stabler] investigate is an open question. To use an example that is uncontroversially non-platonist: when a geologist studies crystal structure s/he will have some representations of those structures in his/her brain. But there is little reason to believe that these structures are identical [or even similar] to the structures of the object s/he studies. So in the case of linguistics you need an extra argument to establish that structures discovered [or postulated?] by C&S are identical to structures in brains. As long as one is aware of this there is no reason to call abstractions [2,3 in way above] not scientifically valid.

@Greg: The moment I see that Chomsky takes work in biology seriously and focuses on data relevant to biology and to evolution, I am more than happy to admit he is interested in abstract properties of the mind/brain. In the most recent book that was all about "Noam Chomsky's evolving ideas about the scientific investigation of language" [Lasnik - whom you may consider competent on such matters], I have seen no evidence that Chomsky has any interest in giving a scientific account for "the evolutionary development of [linguistic] properties".

@Thomas: My apologies, I was entirely unaware of your moral sensibilities. May I ask where they were when Norbert called my review of 'Of Minds and Language', published in JL, 'junk'? Or, since you probably don't really care about my feelings, where was your moral outrage about Norbert also writing "why do journals like the Journal of Linguistics (JL) and review editors like Peter Ackema solicit this kind of junk?" - given that Peter Ackema had absolutely nothing to do with that review. I checked the comments at: http://facultyoflanguage.blogspot.ca/2013/07/inverse-reviews.html - you expressed no concern whatsoever at the public [and factually false] accusation by Norbert. Or take "At any rate, it’s clear that the editors of these journals have decided that there’s no future in real scholarship and have decided to go into show business big time" - even assuming my review was junk - does ONE bad review justify THIS evisceration of the editors of JL? If not, why did you never object? - so, with all due respect, I think as far as hypocrisy is concerned I have a long way to go to catch up with you...

"Now maybe you can tell me why you use the [derogative?] 'merely' when referring to Postal's view?"

Where have I done that? I wrote that a view such as Postal's (and, I take it, yours) takes _grammars_ to be "merely" abstract objects, emphasizing that under such a view, a grammar is taken as nothing more than an abstract object.

" Do you think that grammars are descriptions of abstract objects [as Alex says we all agree on that now] plus "of some we-know-not-what-exactly-in-humans" and hence the work that Postal does is not as sophisticated as the work you do?"

Where did I even start spelling out a non-argument like that? Where does the "hence" come from? And when did I say that grammars are descriptions of abstract objects?

"Maybe you can point to a few insights you have contributed that someone like Postal who merely works on the abstract structure of language could not possibly have contributed?"

I haven't contributed anything --- such is the fate of the Philosophy and CompSci grad-student, I guess. I'm also not in the least bit interested in the abstract structure of language (honestly, I don't think I understand what the words "abstract", "structure" and "language" mean here), but I think we've had this before.

"To use an example that is uncontroversially non-platonist: when a geologist studies crystal structure s/he will have some representations of those structures in his/her brain. But there is little reason to believe that these structures are identical [or even similar] to the structures of the object s/he studies. So in the case of linguistics you need an extra argument to establish that structures discovered [or postulated?] by C&S are identical to structures in brains."

Except under a Chomskyan ontology (I know, it's incoherent, except, it seems the only way to understand the argument(s) for that is to already believe the conclusion) this "analogy" doesn't get off the ground. I feel like we've been at this point in the discussion before...

@Christina: I'm not taking issue with you calling something bullshit, I'm taking issue with the double standard: You like to play the passive-aggressive indignation card about the most harmless of remarks, but at the same time you engage in a fair share of mud slinging yourself. And that just doesn't mix.

I'm all for people speaking their mind freely without unnecessary self-censorship, so by all means, if you think something's bullshit and want to say so, the more power to you. But then don't complain when other people do the same, and more importantly, don't deliberately interpret what other people are saying in the most negative way possible just so that you can accuse them of being inconsiderate.

@Thomas: You said my hypocrisy boggles the mind - if this is not 'taking issue' I honestly do not know what would be...

Now let's have a look at your twisted interpretation of what I said in answer to your question below [which I took to be a genuine question - maybe that was a mistake]:

"@everybody who has ever been part of the recursion paper war: May I ask why this debate has to be so complicated? The only reason there is a discussion at all is that people like Everett object to the notion that recursion is an integral part of language."

First, you talk about a recursion paper WAR. One would have to assume that [in a war] you consider anyone who disagrees with your view about recursion as 'enemy'. You further claim "The only reason there is a discussion at all is that people like Everett object to the notion that recursion is an integral part of language...". This claim is made right AFTER Greg went through a great deal of effort to provide a long list of issues that require clarification in the WHRH paper [which was written to clarify HCF]. You do not even hint at the possibility that the conflations and confusions Greg lists could provide an answer to the question "why is the debate so complicated?" Instead you claim THE ONLY REASON there is a discussion at all is that people like Everett object to the notion...

So if, according to you, I interpreted DELIBERATELY [you do not even consider I could have made a mistake] in the MOST NEGATIVE WAY what you said [my interpretation was that you disrespect Dan] - then please enlighten us: WHAT was the way in which you meant what you said? Did you mean to express your admiration for Everett's work in this way? Why did you not at least consider the possibility that the many problems Greg listed contribute to what, according to you, is a "war", but placed the responsibility for it exclusively on 'people like Everett'?

@Christina: "You said my hypocrisy boggles the mind - if this is not 'taking issue' I honestly do not know what would be..."

and I am taking issue, and I explicitly explained above what I'm taking issue with.

As for the Everett incident, thanks for illustrating exactly what I'm talking about. First a linguistic tidbit: if you go back to that thread, you'll also see the phrase "people like Smolensky" is used in another comment, with no negative connotation whatsoever. So reading "people like Everett" in a derogatory fashion is already one instance of you jumping to conclusions. Second, my qualm was about the fact that generativists are engaging in a huge empirical debate with Everett when the right answer would be that his results are interesting, but have no bearing on the recursion issue. So this complaint wasn't at all about Everett or the merits of his work but rather about the puzzling failure of generativists to point out that Chomsky's claims about recursion are not at odds with Everett's findings.

For exhibit #2, please scroll up a little bit and look at Benjamin's comment, who sounds rather surprised by your negative interpretation of what he said about Postal. Precisely because what he said about Postal is really innocent. Keep in mind that Benjamin is a computational linguist. What axe would he have to grind when it comes to Postal? And personally, I have to admire how politely he replied to your passive-aggressive inquiry about his contributions to the field.

Then we had the case of Greg saying that he wasn't sure about some aspects of Postal's work, which you turned into an in dubio pro malo double whammy by inferring that

1) Greg doesn't know Postal's work at all, and

2) this is due to some big Chomskyan anti-Postal conspiracy in his field.

And as a reminder, Greg, just like Benjamin, is a computational linguist, a field where most people know little about Chomsky's theories and happily use other frameworks such as HPSG, LFG, CCG, TAG, or just plain old phrase structure grammars.

So I stand by my initial assessment, you're easily offended while having a rather antagonistic discussion style yourself. There's nothing I can do about it, and I'll still join discussions with you if I think they touch on an interesting point (since I mostly care about technical issues that doesn't happen very often), but I will not expend any energy on debunking any farfetched misinterpretations.

Thank you for providing another delightful example of your inconsistencies:

"and I'll still join discussions with you if I think they touch on an interesting point (since I mostly care about technical issues that doesn't happen very often),"

Our last discussion you initiated with:

"Christina Behme, January 16, 2014 at 7:48AM: Part of the 'problem' might be the disrespect displayed in phrases like 'people like Everett'.

Christina Behme, January 25, 2014 at 2:35 AM: "Galilean method" BS

The hypocrisy boggles the mind."

Just what is the technical issue you cared about here? Do not bother with an answer - only someone with an antagonistic discussion style could wonder.

But maybe in your eagerness to cut me down to size you should not go so far and imply Greg and Benjamin "know little about Chomsky's theories" because they are computational linguists - that is really unfair to both of them.

not often != never

"you should not go so far and imply Greg and Benjamin 'know little about Chomsky's theories' because they are computational linguists - that is really unfair to both of them."

exhibit #3

@Christina (Jan 25, 12:43): How the structures [of representations = tokens] in the brain are related to the structures linguists [like say Collins&Stabler] investigate is an open question.

That is true. The way C&S (and Chomsky) investigate the structure of representations in the brain is indeed very indirect. They first try to understand what properties those representations in the brain must have, in order to give rise to the visible manifestations they do (linguistic behaviour in its widest sense). To do that, they idealize from these visible manifestations, and partition the set of types (of tokens) into `grammatical' and `ungrammatical'. (This is a pedagogical simplification.) Under their idealization, tokens of a grammatical type are attested, and tokens of ungrammatical types are not attested. They then try to account for this partition of the possibilia. This is what a grammar is: a description of a partition. This is a problem that the brain is solving: from among the possibilia, it instantiates only the grammatical ones. (Again, there is an idealization at work here.) Say now we have a nice description of a partition. This describes, at an extremely abstract level, something that the brain is doing. We might want to describe this same thing at a slightly less abstract level. So we ask: how could any computing device actually compute whether something is in one block or the other of the partition? This is the same problem that the brain is solving, but slightly less abstract. Once we have a nice solution here, we can again become slightly less abstract and ask how this algorithm is realized in the brain itself. This is the program that C&S are embarking on. Certainly you would agree that it is at least coherent. The reason that so little connection or reference to the actual brain is made is because people are still working primarily on the problem of describing the partition.
This is why I think it makes very little difference whether one thinks one is studying some abstract (platonic) realm of sentence types, or that one is studying some concrete behaviour at a high level of abstraction and idealization -- one will at the first step above be doing functionally the same thing.
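To make the middle step concrete: asking which block of the partition a string falls into is a recognition problem, and for simple formalisms it has textbook solutions. The sketch below is a CKY recognizer for a tiny context-free grammar in Chomsky normal form. It is only a stand-in, assuming a hypothetical toy grammar (the tables `BINARY` and `LEXICAL` and the function `grammatical` are invented for illustration). It is not a minimalist grammar, whose recognizers are considerably more involved, but it shows what it means for a computing device to compute the partition a grammar describes.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form (illustration only).
# BINARY maps a pair of child categories (B, C) to every A with a rule A -> B C.
BINARY = {
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}
# LEXICAL maps a word to every category A with a rule A -> word.
LEXICAL = {
    "the": {"Det"},
    "dog": {"N"},
    "boy": {"N"},
    "bites": {"V"},
}


def grammatical(words, start="S"):
    """CKY recognition: True iff `words` lands in the 'grammatical' block."""
    n = len(words)
    if n == 0:
        return False
    # chart[i][j] collects every category that can span words[i:j].
    chart = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        chart[i][i + 1] = set(LEXICAL.get(word, ()))
    # Build longer spans from pairs of shorter, adjacent spans.
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):
                for b, c in product(chart[i][k], chart[k][j]):
                    chart[i][j] |= BINARY.get((b, c), set())
    return start in chart[0][n]


print(grammatical("the dog bites the boy".split()))  # True
print(grammatical("dog bite boy".split()))           # False: 'bite' is not in LEXICAL
```

The chart-based design matters here: each span is analysed once and reused, so the recognizer runs in time cubic in sentence length rather than enumerating every possible tree.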

To use an example that is uncontroversially non-platonist: when a geologist studies crystal structure s/he will have some representations of those structures in his/her brain. But there is little reason to believe that these structures are identical [or even similar] to the structures of the object s/he studies. So in the case of linguistics you need an extra argument to establish that structures discovered [or postulated?] by C&S are identical to structures in brains.

I don't understand what you are getting at here. I wonder if it has something to do with the various readings of `knowledge of language'? C&S are not interested in what goes on in their heads when they try to understand what goes on in your head when you use language.

@Thomas: If we are playing "what's your favourite bit of Christina hypocrisy", then here is my entry:

**

Christina Behme February 13, 2013 at 1:29 PM

Lets leave the insults in the coat roam, shall we? They are especially unbecoming when one has one's foot as far in the mouth as you do.

**

In consecutive lines!! The mind boggles, indeed.

@Greg: this touches on a point where I may disagree with you slightly.

The methodologies that one *should use* differ in the two cases even if the methodologies that are actually used are very similar. For example, if you are a platonist there is no need to worry about computational efficiency or learnability, though one might worry about say simplicity. Given that most linguists -- not you and not me of course -- don't care about these two factors, but do care a lot about simplicity, one can see how the argument can be made that what they are really doing is Platonist.

Of course, I think linguistic Platonism is intellectually indefensible for other reasons (the epistemological problems are insurmountable).

Thanks for the comments, Greg. I have no reason to doubt that C&S are interested in the partitioning project you describe. As you nicely put it, as long as one is working on what you call the first step, it makes little difference whether one is a Platonist or not. I do not think there is any incoherence in attempting to find an algorithm that can generate only the grammatical set, but I think it is misleading to call this work BIOlinguistic when, as you say, biology really is not of concern at this point.

Now I disagree re Chomsky's interest in the partitioning project. If we take seriously what he says in some of his publications, he thinks this is a rather useless project. Take for example:

"Weak generation has no clear meaning; strong generation has a clear meaning – it’s a biological phenomenon. There is a class of structured expressions and you can figure out what they are. Weak generation is highly problematic. First of all there’s no obvious interest: there’s no reason why we should be interested in an impoverished version of the object that’s generated. It’s uninteresting as well as unclear what the class is; you can draw the boundaries anywhere you want. We talk about grammatical and ungrammatical but that’s just an intuitive distinction and there’s no particular reason for this; normal speech goes way beyond this. Often the right thing to say goes outside of it; there are famous cases of this, like Thorstein Veblen, a political economist who was deeply critical of the consumer culture that was developing a century ago, who once said that culture is indoctrinating people into ‘performing leisure.’ We understand what that means and that’s just the right way to say it but from some point of view it’s ungrammatical. Rebecca West was once asked some question about possible worlds, and she said that ‘one of the damn thing is enough.’ We know exactly what that means and that it’s a gross violation of some grammatical rule, but there isn’t any better way of saying it; that’s the right way to say it.

Are such things proper strings or not proper strings? Well, it makes no difference what answer you give – they are what they are, they have the interpretation they have – it’s given to you by the linguistic faculty, but they’re all strongly generated. That’s the only real answer." [Chomsky, 2009, 388/9]

In this passage Chomsky is pretty clear about the partitioning project: "It's uninteresting as well as unclear what the class [of grammatical expressions] is; you can draw the boundaries anywhere you want." - I do not agree with this claim but here it is not important what I think Chomsky should be interested in but what he says he is [and more importantly is not] interested in.

You say "C&S are not interested in what goes on in their heads when they try to understand what goes on in your head when you use language." This comment suggests that implicitly you assume Chomsky's ontology to be incorrect. If it is true that ONE mutation installed LF in the brain of one of our distant ancestors and we all inherited this LF without any change [remember the Argument from the Norman Conquest], then "what is going on in my head =LF" has to be identical to what is going on in C&S's heads when we strongly generate expressions. The fact that I may make more grammatical mistakes than they do is utterly irrelevant on Chomsky's view: performance can and does differ, but competence does not. If you disagree with this and maintain that "C&S are not interested in what goes on in their heads when they try to understand what goes on in your head when you use language", you and I agree.

To be clear: my objections are to claims as those in the passage I cite above NOT to the project you outline.

@Alex: sorry, I only saw your comment after I had posted my reply to Greg. I may be wrong about this, but I understood Greg to be saying that at the moment we have no algorithm that can do the partitioning:

"The reason that so little connection or reference to the actual brain is made is because people are still working primarily on the problem of describing the partition."

Now I think Greg is right: this early in the game there is no difference between platonist and non-platonist attempts. The differences you allude to come into play once we have an algorithm. The platonist can just happily use this algorithm while the biolinguist needs to do the extra step and figure out whether/how this algorithm could be implemented in a human brain [and if it can how it impacts say learnability - that is overcome the challenge by Ambridge, B., Pine, J. M., & Lieven, E.V.M. (in press). Child Language Acquisition: Why Universal Grammar doesn't help. Language. - just a reminder that platonism is not the only challenge to the Chomskyan project]

@Benjamin: apologies, I missed your comments in the flood of TG posts. First, I would like to emphasize that I was asking questions, NOT attributing any views to you. Now, as a philosopher familiar with the Platonism debate, you know of course that we have seen decades of denigration of this view from Jerry Fodor's [1981, 159], “nobody is remotely interested in [platonism]” to Iten, Stainton & Wearing's [2007:237] allegation that Katz's argument for Platonism is "supported by abstruse philosophical reasoning". You will remember that Norbert called Postal's platonist position 'silly' here: http://facultyoflanguage.blogspot.ca/2013/03/going-postal.html [just to be clear, Norbert did not call Postal or any of his not-to-platonism-related work silly, only the platonist position as he understands it].

Given this widespread dismissive attitude towards Platonism [to which I do not recall you ever objecting], it was not entirely implausible that you used 'merely' to express the same kind of disapproval. Still, I did not attribute the view to you but ASKED if this is your view. Now your reply makes it clear that you found my questions offensive - so apologies for this - I did not mean to offend you. And I am very glad to learn that, unlike many on this blog [and in the wider linguistic community], you not only appreciate Paul's contributions to linguistics but also take his platonist position seriously [which incidentally is not the same as agreeing with it].

@Christina: "The platonist can just happily use this algorithm while the biolinguist needs to do the extra step and figure out whether/how this algorithm could be implemented in a human brain [and if it can how it impacts say learnability..."

That's a nice summary of the biolinguistic project - do you honestly find it incoherent, just because there's an "extra step"? If the correct algorithm has not even been discovered, it's a bit odd that you're so outraged that biolinguists haven't made any concrete proposals about biological organs at this stage. As you say, the algorithm has to be much better understood first. Then, the biolinguistic project will have the task of figuring out implementations, in several stages, from the abstraction to the concrete. But note that, presumably, the platonist will have to do the same, if s/he wishes to understand how the epistemological problem is solved. The difference being that the platonist's task is likely to be insurmountable.

By the way, since you do not - from what you've previously said, and reasonably so - subscribe to platonism, it really does look like this debate is simply terminological. It's only about the 'bio' in 'biolinguistics' (and your repeated references to "BIOlinguistics" do belabour this point). That's a lot of energy wasted for what seems like such an inconsequential matter, especially given the above.

@AlexC: Yes, I agree with you; I just wanted to try to show that, despite vituperative rhetoric, much (if not all) of these debates don't impact the daily lives of the working syntactician. I also find platonism an indefensible position. Perhaps because of that, I do not believe that Postal really intends to adopt platonism. I imagine he might be exaggerating to try to countervail what he sees as much sound and fury signifying nothing from the other side; trying to say `hey, you can say what you want, but what you're really doing in your daily lives is more like this'.

@Christina: (I think the weak generative capacity issue is one of the few where Chomsky is just dead wrong.) What Chomsky is doing there is proposing a hypothesis. The hypothesis is that the best description of what people actually do will come from treating all possible ways people understand sentences as being governed by the same abstract process. (The grammar should assign some structure to every string of words, structures being proxy for meanings etc.) This is just a refinement of my gross simplification above; his project is the same as the C&S project, with just this extra hypothesis. I personally think a more productive hypothesis is to treat some sentences we can comprehend as not being processed in the same way as others; `dog bite boy' is comprehensible, but I would rather explain this in terms other than that it is assigned a structure by the grammar in the normal way.

@Christina: Oops; there are algorithms for computing this for many grammatical frameworks, minimalist grammars included.

@doonyakka: I am afraid Alex C. would not approve if I were to go one more time over the arguments Postal gives for Platonism. So just very briefly: the extra step is NOT the problem. If you are interested in the arguments for Platonism, I recommend http://ling.auf.net/lingbuzz/001607 as a good starting point. I have also explained several times why I object to calling something that involves no reference to biology bio-linguistics - so just one point [again]: it shows very little respect for the work of biologists to engage in these pretentious, yet empty claims. You would probably find it odd if I claimed to have made great discoveries in 'chemo-linguistics' or maybe 'astro-linguistics' that have nothing to do with chemistry or astronomy. For more details on why I think linguists should distance themselves from the many pretentious claims Chomsky has made recently, have a look at: http://ling.auf.net/lingbuzz/001592

@Greg: Apologies that I misunderstood your statement - so you are saying there is at least one algorithm known that partitions all grammatical expressions of English from the ungrammatical expressions?

You are of course free to find platonism indefensible. But it does not seem to follow that Postal has been dishonest numerous times when he has in print adopted and defended this position. Don't you think it makes very little sense to exaggerate one's commitment to a position that is as hugely unpopular as Platonism if one's goal is to 'countervail' Chomsky's position? Or, given how persistently Chomsky has ignored any criticism by Postal would it make any sense to continue the ruse if Postal really is not committed to Platonism? If you think Postal is dishonest, would he not reason that he'd stand a much better chance to achieve his goal if he pretended to defend a more popular position? [maybe join forces with Tomasello or Everett or - take a pick anything would make more sense than platonism]. I hope you did not want to attribute this combination of stupidity and dishonesty to Postal.

@Christina: I should not have attributed this reading to Postal; I stand corrected. I should have said that if this is what were meant I would find it a much more reasonable position.

As for the algorithms, there are algorithms that partition the space of expressions (into grammatical and ungrammatical) for every way of partitioning them that can be given as a minimalist grammar. (The same holds true for richer formalisms, such as LFG with an offline parsability constraint). Whether one of these is `right', I cannot say.

For the entertainment value of chucking a random brick at an argument for Platonism, consider this for the 'type argument':

"Consider the much-cited example 'Flying planes can be dangerous'. Generative grammarians who discussed this example were not talking about tokens on their blackboards. If they had been, they would not have been talking about the same thing. But if different grammarians were talking about different things in such circumstances, grammar would lack a common subject matter."

KP1990:523.

But there is no single abstract mathematical object that they could have been talking about prior to the recent invention of 'univalent foundations' a few years ago, since any mathematical representation of a linguistic structure has a proper class of isomorphic functional equivalents (for example trees can be formalized in many different ways, using pretty well anything as nodes and arcs or representatives thereof).

Whereas, even if the universe is open, there is probably only a denumerable infinity of actual blackboard representations of grammatical structure trees in it. Even now, I wouldn't be surprised if zero linguists really understood the tools that can now be used to turn isomorphism into identity (I certainly don't). As best I can make out, Postal accepts mathematical representations as Platonic objects that, for him, linguists are supposed to study. But they don't provide a unique object of study. This is not a problem if you are using them as a tool - having 3000 functionally identical copies of a single lens is not a problem, as long as you have the one(s) you need.
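A small sketch of the point about isomorphic formalizations, assuming two hypothetical encodings of one toy tree: nested tuples, and a table of labelled nodes with arbitrary integer IDs plus parent-to-children arcs. The names `T1`, `T2_LABELS`, `T2_ARCS`, `canon_nested` and `canon_graph` are invented for illustration. The two Python objects are different, yet both map onto the same canonical form; that canonical form is, of course, just one more encoding.

```python
# Hypothetical encoding 1: a tree as nested tuples (label, child, ...),
# with bare strings as leaves.
T1 = ("S", ("NP", ("N", "planes")), ("VP", ("V", "fly")))

# Hypothetical encoding 2: the same tree as labelled nodes with arbitrary
# integer IDs, plus arcs from each parent to its ordered children.
T2_LABELS = {17: "S", 3: "NP", 99: "N", 4: "planes", 8: "VP", 21: "V", 5: "fly"}
T2_ARCS = {17: [3, 8], 3: [99], 99: [4], 8: [21], 21: [5]}
T2_ROOT = 17


def canon_nested(t):
    """Canonical form of encoding 1: (label, canon(child), ...)."""
    if isinstance(t, str):  # a leaf is just its label
        return (t,)
    label, *children = t
    return (label,) + tuple(canon_nested(c) for c in children)


def canon_graph(labels, arcs, node):
    """Canonical form of encoding 2, read off from `node` downwards."""
    kids = arcs.get(node, [])
    return (labels[node],) + tuple(canon_graph(labels, arcs, k) for k in kids)


print(T1 == (T2_LABELS, T2_ARCS, T2_ROOT))  # False: different objects
print(canon_nested(T1) == canon_graph(T2_LABELS, T2_ARCS, T2_ROOT))  # True
```

Swapping the integer IDs for strings, or the tuples for lists, yields yet more encodings that pass the same check, which is one way to picture the many functionally equivalent representations of a single tree.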

Christina wrote: "[maybe join forces with Tomasello or Everett or - take a pick anything would make more sense than platonism]."

This is something that puzzles me: how is, from an "ontological" point of view, Everett's or Tomasello's position any more respectable than the "Chomskyan" one? If the alleged incoherence is completely independent of nativist or domain-specific assumptions, how is, say, a Cognitive Grammar approach less philosophically suspect than a Generative Grammar one?

@Avery: you may of course do whatever you find entertaining, but if the brick is meant to have any effect on the arguments for platonism, it ought to be chucked at least in their general direction. Yours seems so far off that I must be missing something. You say:

But there is no single abstract mathematical object that they could have been talking about prior to the recent invention of 'univalent foundations' a few years ago, since any mathematical representation of a linguistic structure has a proper class of isomorphic functional equivalents (for example trees can be formalized in many different ways, using pretty well anything as nodes and arcs or representatives thereof).

This argument seems to give epistemology priority over ontology: before we had discovered [invented?] 'univalent foundations', there could not have been a single mathematical object? Platonists hold that abstract mathematical objects exist INDEPENDENTLY of us, so whatever we discover or invent has no impact on them. But since you deny platonism, let's take a concrete example. Are you saying that before we had figured out that gamma ray bursts "consist of a narrow beam of intense radiation released during a supernova or hypernova as a rapidly rotating, high-mass star collapses to form a neutron star, quark star, or black hole", supernovas, quark stars and black holes did not exist?

Your blackboard argument [if I understand it correctly] seems to be an argument for nominalism. Even people who utterly dislike Postal/Katz can look at Chomsky's arguments against nominalism - they seem sound to me.

I did not want to discuss platonism again, but since you brought it up I'd like to say something about the reason Alex C [and, I think, implicitly Greg as well?] has given for rejecting it: "linguistic Platonism is intellectually indefensible [because] the epistemological problems are insurmountable". If this argument is sound, it seems to imply that unless we can know something, it cannot exist: we cannot know platonic objects, hence they cannot exist. Again we let epistemology trump ontology. Yet at any given time in our history there have been things people thought we could not possibly know. But isn't this what science is about: uncovering things we never knew before?

This brings me to a point Katz made long ago: Alex is probably right when he insists that everything knowable about concrete objects has to be discovered with the methods of natural [physical] science. But the Platonist claims that abstract objects are not of the same kind as concrete objects, hence are not to be studied with the same methods. Now you may cry 'irrational' and 'occult pseudoscience' at such a suggestion, or you may look at the work Postal actually does; it does not look irrational to me.

Further, it seems that far from all epistemological problems have been solved for non-platonist linguistics either. Chomsky now claims that linguistic creativity could be one of 'the mysteries of nature, forever hidden from our understanding'; should we therefore abandon the natural sciences as well? I know how you will answer this rhetorical question. But then why single out linguistic platonism? It is in a way ironic that Katz and Postal have paid more attention to biology than many biolinguists: the fact that [according to them] languages have properties that no object generated by a biological organ could have led them to conclude that the Chomskyan paradigm must be incorrect. So their opposition to biolinguistics is grounded in ontology [the way the world IS], not in epistemology [what we can [currently] know about the world]. I think that if one wants to prove K&P wrong, it is at least worth engaging them on these grounds instead of insisting that epistemology ought to trump ontology.

@Benjamin: none of the other views invokes the existence of abstract objects. So from an ontological point of view, all the people I listed agree that concrete [physical, 'natural'] objects are the only objects that really exist and can be studied by science. This does not, of course, entail that their theories are uncontroversial. But, so to speak, they all play on the same [ontological] team against the Platonist.

Is that true? Granted, I'm not very familiar with the specific formal proposals of Tomasello or Everett (despite having read books by both), but standard Construction Grammar approaches also involve talk of structured representations, no? If you have any reference with a proper formal treatment by Tomasello or Everett that illustrates the crucial difference, that would be greatly appreciated.

Out of interest, what about a view such as the one expounded by Jackendoff in his "Foundations of Language"?

@Benjamin: Keep in mind there is a difference between structured representations and the objects they are representations of. In the case of linguistic objects the ontological status of the representations is not under dispute [Postal accepts that tokens of linguistic objects are physical/biological objects]. The platonist/non-platonist dispute is over linguistic objects themselves: are they abstract objects as Katz and Postal claim or physical objects [like brain states or objects generated by brains or some other type of physical/natural object].

What Alex alludes to is the question: how can we know about linguistic objects [or know that our representations of them are correct]? If linguistic objects are some kind of concrete object, we can [in principle] learn everything there is to know about them with the methods of the natural sciences. If they are abstract [=platonic] objects, we need different methods, and so far we do not know what kinds of methods would be reliable [remember how long it took humans to develop reliable scientific methods, and some philosophers claim even our current methods are not terribly reliable]. So the epistemological worries Alex mentions are certainly valid.

Now obviously, if someone hypothesizes that linguistic objects are a certain kind of natural object, s/he can be incorrect. This is what the dispute between someone like Chomsky and Tomasello is about: Chomsky claims there exist some language-specific brain structures; Tomasello denies this and claims there are only 'general purpose brain structures that are recruited for linguistic tasks' [to be clear, this is a very crude cartoon]. It is possible [in principle] to test these competing hypotheses with the methods of the natural sciences: you "look at" brains and either find some special structures or you do not find any. Of course, in reality it is far more complicated than this [our current methods for 'looking at' brains are still very crude], but it is at least in principle possible to decide these disputes exclusively with the methods of the natural sciences. Another dispute is about the details of the internal structure of linguistic objects. Again, if you hold that linguistic objects are natural objects, you can ultimately hope to uncover their internal structure with the methods of the natural sciences.

I do not know how much Tomasello worries about ontology besides being firmly committed to a naturalist ontology [he holds we invented numbers, Chomsky seems uncommitted on this issue] and as far as I know Ray Jackendoff is also a committed naturalist [but you have to check with him].

@Christina If linguistic objects are platonic (is this what you claim? or are you arguing for a position you don't believe in just for fun?), then the epistemological problem goes very deep: if they are causally inert objects that exist outside of space and time, then no scientific methodology can tell us anything about them. Thus any knowledge we have of them must come from divine revelation or from some a priori reasoning, and we probably don't like the first, being good post-Enlightenment thinkers, nor the second, because many linguistic facts are clearly historically contingent. So while it seems like you might be able to rescue mathematical platonism using the second fork, e.g. via plenitudinous platonism, linguistic platonism is dead in the water.

This is a different question from the question of how we can investigate linguistic facts if we take them to be neurally/biologically real. That is a methodological question, which raises no particular epistemological issues beyond the standard ones that affect all scientific theories positing unobservable entities.

"The platonist/non-platonist dispute is over linguistic objects themselves: are they abstract objects as Katz and Postal claim or physical objects [like brain states or objects generated by brains or some other type of physical/natural object]."

I got that much, but thanks. I still doubt that any of the people you point(ed) out as having some kind of different approach would qualify as holding a view of linguistic representations (the ones attributed to speakers of a language) as representing something like an abstract linguistic entity (and that these things enjoy their existence fully independently of speakers); I may well be wrong, so if you have any relevant reference, I'd love to see it. Then again, it doesn't really matter that much.

@Benjamin: the comment Alex C. just posted demonstrates much better than I did why no one [in his right mind] would pretend to be a platonist if his goal were to challenge the credibility of Chomsky [or of people working in his framework]: no matter how hostile the debate with Everett or Levinson or Tomasello has been, no one ever accused them of depending on divine revelation or a priori reasoning. So it is much more likely that someone who IS a platonist would never admit this in public, to avoid this kind of ostracisation, than that someone who wants to be taken seriously [maybe we can all agree Postal wants to be taken seriously?] would pretend to be a platonist. This was the only point I was making, and Greg seemed convinced and has retracted his argument.

The fact that something exists independently of speakers does not entail it is an abstract [platonic] object. The phrase 'abstract linguistic entity' is used to mean several different things as we discussed earlier in this thread. I am not familiar with Tomasello's most recent publications - so if you are really interested in his current view about the nature of linguistic objects it is probably best to e-mail him and ask.

@Alex: I am neither a platonist nor do I 'argue for a view I do not believe in just for fun' [you accused me of constructing a false dichotomy earlier :)]. I think that, in light of how little is currently known about the nature of linguistic objects, it is a good idea to remain open-minded. I have challenged Paul extensively with arguments against platonism [including those you mention] but was not able to refute him, so I am not in a position to say he is wrong.

@Christina: My point wasn't so much about Postal's platonism, but rather about the fact that, given that you are not a platonist, *your* arguments against biolinguistics boil down to your dislike of the prefix "bio-". Because of this, you say (in so many words) that Chomsky is a charlatan for play-acting at biology and linguistics.

You also claim that Chomsky has become something of a laughing stock amongst biologists in the know, for his outdated and outlandish views. As you have read every publication of Chomsky's, I presume you are familiar with his (1993) monograph "Language and Thought", in which he discusses matters of language, biology, and reduction with (amongst others) James Schwartz, Prof. of Neurology at Columbia University. I won't go into specifics for reasons of space, but Chomsky's arguments on pp. 79-89 seem (to me at least) to be of far greater subtlety than your accusations of intellectual fraud would warrant. In any case, I'd be interested in your thoughts on that discussion.

As I understand it, your criticism is solely that there's nothing concretely biological (yet) about biolinguistics. To me, this is an irrelevance, as well as a misreading of the biolinguistic enterprise, and I'm not even a biolinguist.

@doonyakka: I am afraid if you want to interrogate me you have to reveal your identity, as virtually everyone else on this blog does.

In the meantime, just two things:

1. You are incorrect: one does not need to be a platonist to make the argument that the claims Chomsky makes about the ontology of his 'program' are internally incoherent.

2. You seem to attribute to me several claims I do not remember making. Especially the term "Chomsky's playacting at linguistics" seems to be borrowed from Paul Postal: http://ling.auf.net/lingbuzz/001686 - so you may want to direct some of your questions at him.

To avoid further mix ups please also provide quotes of what I said in future interrogations.

@Christina: I think my brick does go in the general direction of the target, and in fact hits it, on the basis of the first full paragraph of KP1990:523. The problem with the blackboard sentences and diagrams was supposed to be that they were nonunique, while the alleged Platonic objects didn't have that problem. But at the time, the only Platonic objects available for concrete discussion were mathematical structures, which also have the nonuniqueness problem. So 20 years on, people have come up with a system where this can be fixed by adopting an axiom, but people who don't have a full grasp of the math might not even want to do that on mere faith. Plus (and this is the brick hitting the target), if you regard the diagrams and math structures as maps, the uniqueness problem is a non-issue.

@Avery: let's proceed on the most charitable interpretation: you and I [and Katz & Postal] are talking about different things. For that reason your brick can't hit the target. So let me explain again what Platonists talk about when they use 'type' [or 'abstract object']. Take: